Nvidia's Bet on AI Computing Power Pays Off Big

Nvidia crushed expectations again, posting a staggering $39.33 billion in quarterly revenue as demand for AI chips continues to surge. The chipmaker's revenue jumped 78% from a year earlier, powered by its data center business, which now makes up 91% of total sales.

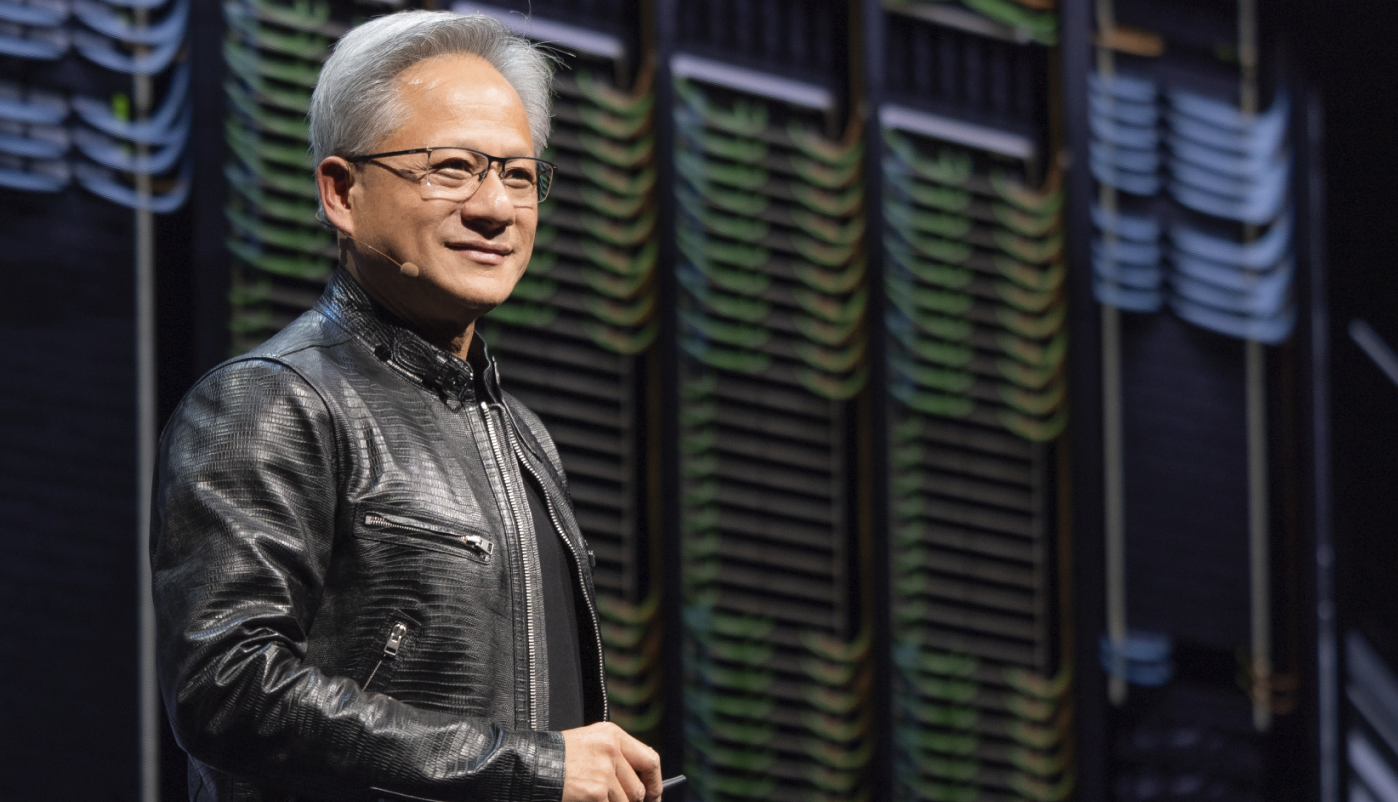

The company's new Blackwell AI chips are proving to be a game-changer. These chips raked in $11 billion in their first quarter of sales, with major cloud providers snapping them up. CEO Jensen Huang calls the demand "amazing," while CFO Colette Kress labels it "the fastest product ramp" in Nvidia's history.

But it's not all smooth sailing. Gaming revenue dropped 11% to $2.5 billion, falling short of expectations. The company's gross margin also dipped to 73%, down 3 percentage points from last year, as newer data center products prove more complex and costly to produce.

Looking ahead, Nvidia forecasts $43 billion in revenue for the next quarter, beating Wall Street's expectations. The company is betting big on AI inference – using models in production rather than just training them. Kress argues that new "thinking" AI models could need 100 times more computing power than current ones.

Why this matters:

- Nvidia's continued growth shows AI's massive appetite for computing power isn't slowing down, even as competitors race to develop their own chips.

- While tech giants like Amazon and Google are developing custom AI chips, Nvidia maintains its dominance by delivering what the market needs right now: reliable, powerful processors that can handle increasingly complex AI workloads.

- The shift from AI training to inference could drive even greater demand, as running AI models in production requires vast amounts of sustained computing power.